How brain-computer interfaces are giving a voice back to people with paralysis

Paralyzed man speaks and sings using groundbreaking brain-computer interface

When someone loses the ability to speak because of a neurological condition like ALS, the impact goes far beyond words. It touches every part of daily life, from sharing a joke with family to simply asking for help. Now a team at the University of California, Davis, has developed a new brain-computer interface (BCI) system that makes real-time, natural conversation possible for people who can't speak. This technology doesn't simply convert thoughts into text. Instead, it translates the brain signals that would normally control the muscles used for speech, allowing users to "talk" and even "sing" through a computer almost instantly.

Sign up for my FREE CyberGuy Report

Get my best tech tips, urgent security alerts, and exclusive deals delivered straight to your inbox. Plus, you’ll get instant access to my Ultimate Scam Survival Guide - free when you join my CYBERGUY.COM/NEWSLETTER.

Real-time speech through brain signals

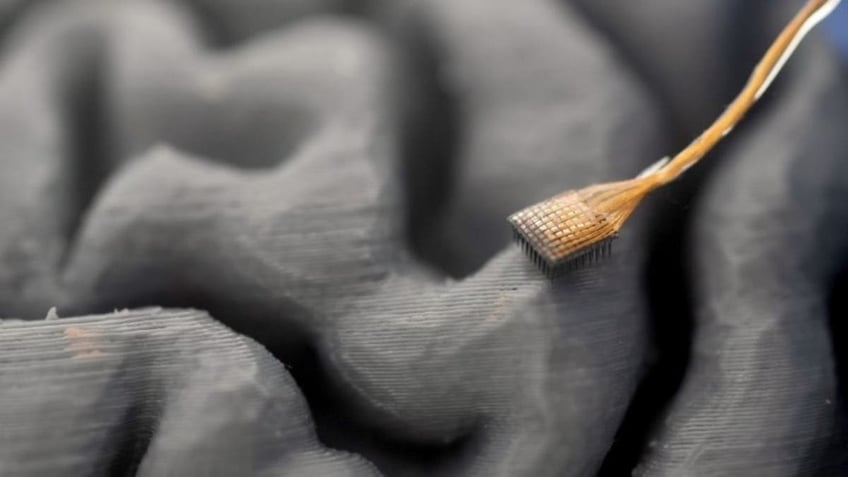

At the heart of this system are four microelectrode arrays surgically implanted in the part of the brain responsible for producing speech. These tiny devices pick up the neural activity that occurs when someone tries to speak. The signals are then fed into an AI-powered decoding model, which converts them into audible speech in just ten milliseconds. That's fast enough for the exchange to feel as natural as a regular conversation.

What's especially remarkable is that the system can recreate the user's own voice, thanks to a voice-cloning algorithm trained on recordings made before the onset of ALS. This means the person's digital voice sounds like them, not like a generic computer voice. The system even recognizes when the user is trying to sing and can change the pitch to match simple melodies. It can also pick up on vocal nuances, like asking a question, emphasizing a word, or making interjections such as "aah," "ooh," or "hmm." All of this adds up to a much more expressive and human-sounding conversation than previous technologies could offer.

How the technology works

The process starts with the participant attempting to speak sentences shown on a screen. As they try to form each word, the electrodes capture the firing patterns of hundreds of neurons. The AI learns to map these patterns to specific sounds, reconstructing speech in real time. This approach gives the user fine control over speech rhythm and tone, so they can interrupt, emphasize a word, or ask a question just as anyone else would.
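The UC Davis team's actual decoder is an AI model whose inner workings aren't described here. As a rough illustration of the general idea only, the toy Python sketch below swaps in a much simpler technique, ridge (linear) regression, trained on synthetic data. It shows the two phases described above: a training phase where neural activity is paired with the sounds the speaker was attempting, and a live phase where each 10-millisecond slice of brain activity is turned into one small frame of sound as soon as it arrives. Everything in it is an assumption for illustration: the channel count, the frame size, the fake data, and the hypothetical helper names `simulate_bins`, `fit_linear_decoder` and `stream_decode`.

```python
import numpy as np

# All sizes below are hypothetical, chosen only to make the sketch concrete.
N_CHANNELS = 256   # assumption: four arrays of 64 electrodes each
N_ACOUSTIC = 20    # assumption: size of one acoustic feature frame
BIN_MS = 10        # one decoded frame per 10 ms window of neural activity

rng = np.random.default_rng(seed=0)

# Hidden "ground truth" link between neural activity and sound, used only
# to generate synthetic practice data. Real brain data is far messier.
TRUE_MAPPING = rng.normal(size=(N_CHANNELS, N_ACOUSTIC))


def simulate_bins(n_bins: int) -> tuple[np.ndarray, np.ndarray]:
    """Synthetic stand-in for recordings: spike counts per 10 ms bin (x)
    and the acoustic frames the speaker was attempting to produce (y)."""
    x = rng.poisson(lam=3.0, size=(n_bins, N_CHANNELS)).astype(float)
    y = x @ TRUE_MAPPING + rng.normal(scale=0.1, size=(n_bins, N_ACOUSTIC))
    return x, y


def fit_linear_decoder(x: np.ndarray, y: np.ndarray, ridge: float = 1e-3) -> np.ndarray:
    """Ridge regression from neural features to acoustic frames.
    A simple stand-in for the study's AI model, not its actual method."""
    gram = x.T @ x + ridge * np.eye(x.shape[1])
    return np.linalg.solve(gram, x.T @ y)


def stream_decode(weights: np.ndarray, neural_bins: np.ndarray):
    """Yield (time_ms, acoustic_frame) as each 10 ms bin arrives, so sound
    can be produced without waiting for the whole sentence."""
    for t, bin_features in enumerate(neural_bins):
        yield t * BIN_MS, bin_features @ weights


# "Training": the participant attempts sentences shown on a screen.
x_train, y_train = simulate_bins(2000)
weights = fit_linear_decoder(x_train, y_train)

# "Live" use: decode five new bins (50 ms of attempted speech).
x_live, y_live = simulate_bins(5)
frames = list(stream_decode(weights, x_live))
decoded = np.stack([frame for _, frame in frames])
rel_error = np.linalg.norm(decoded - y_live) / np.linalg.norm(y_live)
print(f"{len(frames)} frames, relative error {rel_error:.4f}")
```

The key design point is the streaming loop: because each frame depends only on the latest slice of brain activity, sound can start playing while the person is still "speaking," rather than after a whole sentence has been decoded. In a real system, a separate voice synthesizer would then turn these acoustic frames into audible speech.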

One of the most striking outcomes from the UC Davis study was that listeners could understand nearly 60 percent of the synthesized words, compared with just 4 percent without the BCI. The system also handled new, made-up words that weren't part of its training data, suggesting it can generalize beyond the examples it learned from.
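The article doesn't spell out exactly how the study scored intelligibility. One common approach, assumed here, is to have listeners type what they hear, count the word-level errors (substitutions, deletions and insertions) against the intended sentence, and report the share of words that came through correctly, roughly 100 percent minus the word error rate. The short Python sketch below shows that arithmetic; the function names `word_edit_distance` and `percent_words_understood` and the example sentences are hypothetical and made up for illustration, not taken from the study.

```python
def word_edit_distance(reference: list[str], hypothesis: list[str]) -> int:
    """Minimum substitutions, deletions and insertions (Levenshtein distance
    over words) to turn the reference sentence into the listener's transcript."""
    prev = list(range(len(hypothesis) + 1))
    for i, ref_word in enumerate(reference, start=1):
        curr = [i] + [0] * len(hypothesis)
        for j, hyp_word in enumerate(hypothesis, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            curr[j] = min(prev[j] + 1,       # word dropped by the listener
                          curr[j - 1] + 1,   # extra word heard
                          substitution)      # wrong (or right) word
        prev = curr
    return prev[-1]


def percent_words_understood(trials: list[tuple[str, str]]) -> float:
    """Pool word errors over all (target sentence, listener transcript) pairs
    and report 100 * (1 - word error rate), floored at zero."""
    errors = 0
    words = 0
    for target, transcript in trials:
        ref = target.lower().split()
        hyp = transcript.lower().split()
        errors += word_edit_distance(ref, hyp)
        words += len(ref)
    return max(0.0, 100.0 * (1 - errors / words))


# Made-up example transcripts, for illustration only (not study data).
trials = [
    ("I would like a glass of water", "i would like a class of water"),
    ("How are you doing today", "how are you today"),
]
print(f"{percent_words_understood(trials):.1f}% of words understood")
# prints: 83.3% of words understood
```

Here the listener misheard one word and missed another, so 2 errors out of 12 target words works out to about 83 percent understood. Errors are pooled across sentences so that longer sentences count proportionally more.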

The impact on daily life

Being able to communicate in real time, with one's own voice and personality, is a game-changer for people living with paralysis. The UC Davis team points out that this technology allows users to be more included in conversations. They can interrupt, respond quickly, and express themselves with nuance. This is a big shift from earlier systems that only translated brain signals into text, which often led to slow, stilted exchanges that felt more like texting than talking.

As David Brandman, the neurosurgeon involved in the study, put it, our voice is a core part of our identity. Losing it is devastating, but this kind of technology offers real hope for restoring that essential part of who we are.

Looking ahead: Next steps and challenges

While these results are promising, the researchers are quick to point out that the technology is still in its early stages. So far, it has been tested with only one participant, so more studies are needed to see how well it works for others, including people who have lost speech for other reasons, such as stroke. The BrainGate2 clinical trial at UC Davis Health is continuing to enroll participants to further refine and test the system.

Kurt's key takeaways

Restoring natural, expressive speech to people who have lost their voices is one of the most meaningful advances in brain-computer interface technology. This new system from UC Davis shows that it's possible to bring real-time, personal conversation back into the lives of those affected by paralysis. While there's still work to be done, the progress so far is giving people a chance to reconnect with their loved ones and the world around them in a way that truly feels like their own.

As brain-computer interfaces become more advanced, where should we draw the line between enhancing lives and altering the essence of human interaction? Let us know by writing to us at Cyberguy.com/Contact.

Copyright 2025 CyberGuy.com. All rights reserved.

Kurt "CyberGuy" Knutsson is an award-winning tech journalist who has a deep love of technology, gear and gadgets that make life better with his contributions for Fox News & FOX Business beginning mornings on "FOX & Friends." Got a tech question? Get Kurt’s free CyberGuy Newsletter, share your voice, a story idea or comment at CyberGuy.com.