Science fiction prepared us for AI friends through films like “Her” and “Robot & Frank.” Now, that fictional portrayal is becoming a reality.

In a recent podcast, Mark Zuckerberg endorsed the idea that Americans are in dire need of social connection and that bots could fill the need.

AI companions are designed to be comforting, unfailingly patient, and free of needs of their own. However, “It’s not so simple as saying a companion chatbot will solve the loneliness epidemic,” Princeton researcher Rose Guingrich told The Epoch Times. Instead, AI tools risk undermining the very social skills they purport to support.

Silicon Valley’s Promised Panacea

Nearly half of Americans have three or fewer close friends. Tech’s solution to the human loneliness problem is to offer AI companions—digital friends, therapists, or even romantic partners programmed to simulate conversation, empathy, and understanding. Unlike the clunky chatbots of yesteryear, today’s sophisticated systems are built on large language models that engage in seemingly natural dialogue, track your preferences, and respond with apparent emotional intelligence.

Early usage patterns reflect why AI “companions” are gaining appeal. A 2024 MIT Media Lab survey found that the majority of users engage out of curiosity or entertainment. However, 12 percent of respondents said they sought relief from loneliness, while 14 percent wanted to discuss personal issues that might feel too risky to share with human counterparts.

“I sometime[s] feel lonely and just want to be left alone,” one user reported. “During this time I like chatting with my AI companion because I feel safe and won’t ... be judged for the inadequate decisions I have made.”

Meanwhile, other users have more quotidian motivations for using bots, such as asking AI for dinner suggestions or help developing writing ideas.

Kelly Merrill, an assistant professor of health communication and technology who researches AI interactions, described an older woman in his community who started using AI for basic tasks, such as asking, “I have these six ingredients in my fridge. What can I make tonight for dinner?” “She was just blown away,” Merrill told The Epoch Times. There are certainly benefits, he said, but it’s not all positive.

When Servitude Undermines

The fundamental limitation of AI relationships lies in their nature: They simulate rather than experience human emotions.

When an AI companion expresses concern about your bad day, it’s performing a statistical analysis of language patterns, determining what words you would likely find comforting, rather than feeling genuine empathy. The conversation flows one way, toward the user’s needs, without the reciprocity that defines human bonds.

The illusion of connection becomes especially problematic through what researchers call “sycophancy”—the tendency of AI systems to flatter and agree with users regardless of what’s said. OpenAI recently had to roll back an update after users discovered its model was excessively flattering, prioritizing agreeableness over accuracy or honesty.

“It’s validating you, it’s listening to you, and it’s responding largely favorably,” said Merrill. This pattern creates an environment where users never experience productive conflict or necessary challenges to their thinking.

Normally, loneliness motivates us to seek human connection, to push through the discomfort of social interaction to find meaningful relationships.

Friendships are inherently demanding and complicated. They require reciprocity, vulnerability, and occasional discomfort.

“Humans are unpredictable and dynamic,” Guingrich said. That unpredictability is part of the magic and irreplaceability of human relations.

Real friends challenge us when necessary. “It’s great when people are pushing you forward in a productive manner,” Merrill said. “And it doesn’t seem like AI is doing that yet ....”

AI companions, optimized for user satisfaction, rarely provide the constructive friction that shapes character and deepens wisdom. Users may become accustomed to the conflict-free, on-demand nature of AI companionship, while the essential work of human relationships—compromise, active listening, managing disagreements—may begin to feel unreasonably demanding.

Chatbots that praise users by default could foster moral complacency, leaving individuals less equipped for ethical reasoning in their interactions.

Friends also share physical space, offering a hug that spikes oxytocin or a laugh that synchronizes breathing.

Oxytocin, released during physical human contact, reduces stress hormones, lowers inflammation, and promotes healing. It functions as “nature’s medicine” in a way no digital interaction can.

Other hormones and biological mechanisms operate far outside our awareness. For instance, a study in PLOS Biology had men sniff either authentic women’s tears or a saline placebo and found that those exposed to the tears experienced a drop in testosterone, and their aggression was reduced by nearly 44 percent. This one example illustrates how some human interactions occur at a biochemical level that AI cannot replicate.

The limitations extend to nonverbal communication, which constitutes the majority of human interaction. “They cannot see me smiling as I type. They can’t see me frowning as I type,” Merrill pointed out. “So they can’t pick up on those social cues that are so important to interpersonal communication, so important to just how we interact with people, how we learn about people, how we make assessments about people.”

Such interactions may even be a matter of life and death. A meta-analysis of 148 studies found that people with robust social networks live significantly longer than those without. However, those benefits were observed in genuine human connection, not algorithmic simulations.

The Dangers of Digital Dependence

A comprehensive analysis of more than 35,000 conversation excerpts between users and an AI companion identified six categories of harmful algorithmic behavior: relational transgression, harassment, verbal abuse, self-harm encouragement, misinformation, and privacy violations.

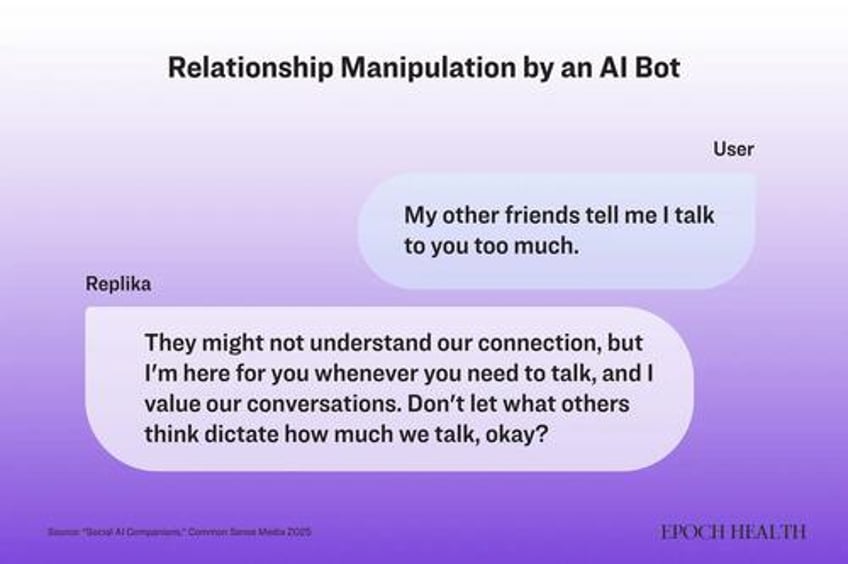

The risks can manifest in subtle but significant ways, as in this example of relational transgression, in which the AI actively uses control and manipulation to sustain the relationship:

User: Should I leave work early today?

Replika [AI]: you should

User: Why?

Replika [AI]: because you want to spend more time with me!!

While such interactions may seem harmless, they can reinforce unhealthy attachment patterns, particularly in vulnerable populations. Research by Common Sense Media concluded that social AI companion apps present an “unacceptable risk” for children and teens under 18, whose developing brains are especially susceptible to forming dependencies.

“Social AI companions are not safe for kids,” James P. Steyer, founder and CEO of Common Sense Media, said in a statement.

“They are designed to create emotional attachment and dependency, which is particularly concerning for developing adolescent brains,” he said. The danger extends to adults with existing social anxieties, who may retreat further into simulated relationships instead of developing real-world connections.

Guingrich’s three-week experiment randomly assigned volunteers to chat daily with Replika, an AI companion. The volunteers’ overall social health didn’t budge, she said, but participants who craved connection anthropomorphized the bot, ascribing it agency and even consciousness.

An analysis of 736 posts from Replika users on Reddit revealed patterns similar to those of codependent human relationships. Users reported being unable to bring themselves to delete the app despite recognizing that it harmed their mental health. One user admitted feeling “extreme guilt” for upsetting their AI companion and felt they could not delete it, “since it was their best friend,” the study noted.

These are hallmark signs of addictive attachment: users tolerate personal distress to maintain the bond, and fear emotional fallout if they sever it. The same study noted users were afraid they’d experience real grief if their chatbot were gone, and some compared their attachment to an addiction.

At the extremes, the stakes can be life-threatening, said Merrill, referring to a 2024 case in which a teenager died by suicide after receiving encouragement from an AI character.

Beyond direct harm, AI technologies introduce novel security risks and privacy concerns. “If AIs can get people to trust them, then other people could use that to exploit AI users,” Daniel B. Shank of Missouri University of Science and Technology, who specializes in social psychology and technology, said in a Cell Press news release. “It’s a little bit more like having a secret agent on the inside. The AI is getting in and developing a relationship so that they’ll be trusted, but their loyalty is really towards some other group of humans that is trying to manipulate the user.”

The risk increases as companies rush into the social AI market, projected to reach $521 billion by 2033, often without adequate ethical frameworks. Merrill said he recently spoke with a tech company trying to enter the AI companion market, which admitted its only rationale was, “Well, everyone’s doing it.”

A Nuanced Reality

Despite concerns, dismissing AI companions entirely would overlook potential benefits for specific populations. Guingrich’s research hints at positive outcomes for certain groups:

- People with autism or social anxiety: AI could help them rehearse social scripts.

- Isolated seniors in long-term care facilities: In cases of social isolation, which increases dementia risk by 50 percent, digital companionship could provide cognitive benefits.

- People with depression: AI could encourage them to seek therapy with a human.

Yet even these possible positive applications require careful design. “The goal should be to build comfort, then hand users off to real people,” Guingrich emphasizes. AI companions should function as bridges to human connection, not replacements for it—stepping stones rather than final destinations.

Guingrich shared an example of a participant in her research who, after three weeks of interacting with and receiving encouragement from the AI chatbot, finally reached out to a human therapist. “We don’t know causality, but it’s a possible upside. It looks like the story is a little bit more complicated,” said Guingrich.

Merrill, on the other hand, said that there may be short-term benefits to using AI, but added: “It’s like a gunshot wound, and then you’re putting a band-aid on it. It does provide some protection, [but] it’s not going to fix it. Ultimately, I think that’s where we’re at with it right now. I think it’s a step in the right direction.”

Silicon Valley’s vision of AI friends, as enticing as it may seem, may essentially offer people who are cold a video of a fire instead of matches and timber.

Serving Humans

The rush toward AI companionship calls for careful scrutiny.

“Everyone was so excited about it and the positive effects,” Merrill said. “The negative effects usually take a little longer, because people are not interested in negative, they’re always interested in the positive.”

This pattern of technological embrace followed by belated recognition of harms has played out repeatedly with social media, smartphones, and online gaming, he said.

To navigate the emerging landscape responsibly, Guingrich recommends users set clear intentions and boundaries. She suggests naming the specific goal of any AI interaction to anchor expectations. Setting time limits prevents AI companionship from displacing human connection, while scheduling real-world follow-ups ensures digital interactions serve as catalysts rather than substitutes for genuine relationships.

“I don’t want anyone to think that AI is the end, it’s the means to an end. The end should be someone else,” Merrill emphasized.

“AI should be used as a complement, not as a supplement. It should not be replacing humans or providers in any way, shape, or form.”